What is generative AI, and what does Oli use?

Let’s start with the fundamentals. Generative AI (or GenAI) means AI that can create things, like text, images, or music. In Oli’s case, it creates words. Word by word, it predicts what should come next based on a huge amount of training data. Think of it like a really well-read assistant that finishes your sentences.

The engine behind this is a type of GenAI called an LLM (Large Language Model). We use models from OpenAI, Anthropic, and Google. You’ve probably heard of ChatGPT, a well-known product built on one of these models.

These models are powerful, but we don’t control what’s inside them. Think of them like a car engine. A car won’t run without one, but on its own, it’s not much use for getting to work. You still need the rest of the car: steering, brakes, safety features, and a clear sense of direction. That’s what we build around the model. Guardrails to keep it on track. Specific behaviours based on real coaching methods. And human checks where needed. That’s how we’ve built Oli.

A Collection of Agents, Working Together

The Agentic Approach

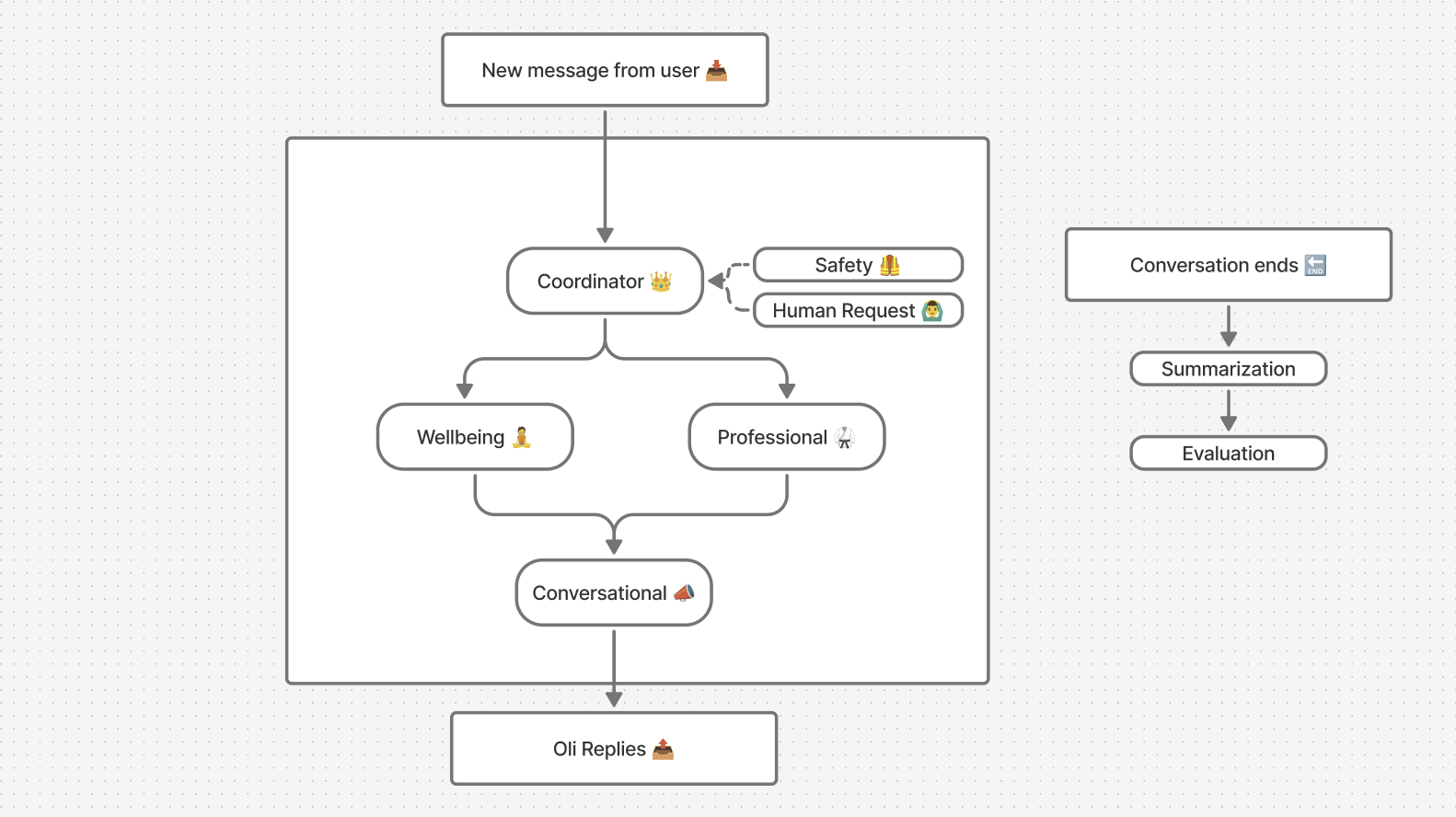

Oli isn’t a single voice or a generic chatbot. Every time you get a message from Oli, it’s actually the result of several specialist 'agents' working together behind the scenes. Each agent uses an LLM to produce a particular result: an agent is like a focused team member with one job to do, whether that’s offering advice on burnout, checking for risk, or making sure Oli sounds warm and human. Each one plays a specific role, and they’re all coordinated to give you the best possible support.

Here’s who’s involved:

- Wellbeing Coach: Supports you with anxiety, burnout, self-esteem, relationships and more, using science-backed frameworks shaped by our behavioural experts. This agent draws on real mental health knowledge and past user interactions to offer advice that fits you, not just generic tips.

- Professional Coach: Focuses on day-to-day work topics and challenges, inspired by our live training sessions and coaching experience, helping you handle tough conversations or career decisions.

- Coordinator Agent: Acts like a manager, choosing which of the two agents is best suited for each situation and double-checking that its response is clear and helpful.

- Supervisor Agents:

  - Risk Agent: Independently scans for signs of high-risk topics like self-harm and can pause the chat and guide you to real human help when needed.

  - Human Handoff Agent: Checks if you might benefit from speaking with a real wellbeing or professional coach and makes it simple to book a session.

- Conversational Agent: Adjusts the final answer into Oli’s warm, caring tone so it always feels human and easy to read.
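
For the technically curious, here is a rough sketch of how the agents listed above could be chained together. It is an illustration only, not our production code: every function name, the keyword lists, and the canned replies are hypothetical, and simple keyword checks stand in for the LLM calls each real agent would make.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical stand-ins for LLM-backed coaches: each takes the user's
# message and returns a draft reply.
def wellbeing_coach(message: str) -> str:
    return f"[wellbeing advice for: {message}]"

def professional_coach(message: str) -> str:
    return f"[work advice for: {message}]"

# Placeholder keyword lists; a real agent would use a model, not keywords.
WORK_KEYWORDS = {"manager", "promotion", "career", "meeting", "feedback"}
RISK_KEYWORDS = {"self-harm", "suicide"}

def coordinator(message: str) -> Callable[[str], str]:
    """Choose which of the two coaches handles this message."""
    words = set(message.lower().split())
    return professional_coach if words & WORK_KEYWORDS else wellbeing_coach

def risk_agent(message: str) -> bool:
    """Flag high-risk topics, independently of the coaches."""
    lowered = message.lower()
    return any(keyword in lowered for keyword in RISK_KEYWORDS)

def human_handoff_agent(message: str) -> bool:
    """Decide whether to offer a session with a human coach."""
    return "talk to someone" in message.lower()

def conversational_agent(draft: str, suggest_session: bool) -> str:
    """Wrap the coach's draft in a warmer tone."""
    reply = f"Thanks for sharing. {draft}"
    if suggest_session:
        reply += " Would you like to book a session with a human coach?"
    return reply

@dataclass
class Reply:
    text: str
    handled_by: str
    paused_for_risk: bool = False

def respond(message: str) -> Reply:
    # The risk check runs first and can pause the chat before any coaching.
    if risk_agent(message):
        return Reply(
            text="Before we go on, I want to check in with you.",
            handled_by="risk_agent",
            paused_for_risk=True,
        )
    coach = coordinator(message)
    draft = coach(message)
    text = conversational_agent(draft, human_handoff_agent(message))
    return Reply(text=text, handled_by=coach.__name__)
```

Calling `respond("My manager gave me harsh feedback")` in this sketch routes to the hypothetical `professional_coach`, while a message that mentions self-harm is paused before any coach sees it.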

Then, once a session is finished, or after a couple of hours have passed, we run two more agents to make sure that Oli’s memory stays fresh and that we have a feedback loop that helps us improve Oli over time:

- Summarisation Agent: Writes a clear recap after each chat that you can access in your conversation history. This summary is also fed into Oli’s memory, so Oli has the context of your latest conversations and can pick up new ones the way a human coach would.

- Evaluator Agent: Judges how useful or valuable a conversation was and gives it a score. It also suggests what could be better, all anonymously, so we keep improving without ever reading your private messages.
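
As a small illustration of when these two after-session agents might kick in, here is a minimal sketch of the trigger. It assumes "a couple of hours" means two hours of inactivity; that threshold and the function name are assumptions made for the example.

```python
from datetime import datetime, timedelta

# Assumption: "a couple of hours" is read as two hours of inactivity.
IDLE_TIMEOUT = timedelta(hours=2)

def should_run_post_session(
    session_ended: bool,
    last_message_at: datetime,
    now: datetime,
    idle_timeout: timedelta = IDLE_TIMEOUT,
) -> bool:
    """True when the summarisation and evaluation step should run."""
    return session_ended or (now - last_message_at) >= idle_timeout
```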

Oli’s Memory: Context Without Compromise

We mentioned above how much context matters for Oli to give advice that fits each user. That’s why we built a memory system that mimics how a real coach remembers what matters. It stores key facts and moments you’ve shared, like when you told Oli about your dog Lua, so your next chat picks up naturally and you don’t have to explain who Lua is or why she’s such a good girl.

If you talk about burnout one day and come back to it a month later, Oli can continue right where you left off. We use a relational database to build this memory in a way that scales and is fast enough to keep up with the natural pace of a human conversation. It only brings back what helps, never random or overly detailed bits that feel intrusive, so it stays caring, useful, and never creepy. This memory, like the rest of our infrastructure, is built and hosted on leading cloud servers in the EU.
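
To make the idea concrete, here is a minimal sketch of a memory that only brings back what is relevant to the current message. It uses SQLite as a stand-in relational database, and the table layout, function names, and word-matching rule are all assumptions for illustration rather than a description of our actual schema.

```python
import re
import sqlite3

def open_memory() -> sqlite3.Connection:
    """Create an in-memory database with a hypothetical memories table."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE memories ("
        " id INTEGER PRIMARY KEY,"
        " user_id TEXT NOT NULL,"
        " topic TEXT NOT NULL,"
        " fact TEXT NOT NULL)"
    )
    conn.execute("CREATE INDEX idx_memories_user ON memories (user_id)")
    return conn

def remember(conn: sqlite3.Connection, user_id: str, topic: str, fact: str) -> None:
    """Store one key fact under a lower-cased, single-word topic."""
    conn.execute(
        "INSERT INTO memories (user_id, topic, fact) VALUES (?, ?, ?)",
        (user_id, topic.lower(), fact),
    )

def recall(conn: sqlite3.Connection, user_id: str, message: str, limit: int = 3) -> list[str]:
    """Return only this user's facts whose topic appears as a word in the message."""
    words = set(re.findall(r"[a-z']+", message.lower()))
    rows = conn.execute(
        "SELECT topic, fact FROM memories WHERE user_id = ? ORDER BY id DESC",
        (user_id,),
    ).fetchall()
    return [fact for topic, fact in rows if topic in words][:limit]
```

In this sketch, a message that mentions Lua brings back the stored fact about Lua, while an unrelated message brings back nothing. The `limit` parameter caps how much is recalled at once, which is one simple way to avoid surfacing too much detail.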

Privacy isn’t an afterthought. It’s built in.

Everything you tell Oli is encrypted and stored securely. Your chats are private and protected by GDPR, HIPAA, and DPI standards. Your employer can’t see what you say. Ever.

We don’t use your conversations to train the AI models. And our partners (OpenAI, Google, Anthropic) are under strict contracts not to do so either.

The only data we use at a company level is anonymised and aggregated. That means we might see that “burnout” was a common theme across a company, but only if there are enough users to keep individual identities completely private. These anonymised insights help your company leaders understand what people need, so they can offer better support where it matters most.
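
The "enough users" rule above can be sketched as a minimum group size: a theme is only reported if a minimum number of different people raised it. The threshold of 10 and the function name below are assumptions for illustration; the real cut-off is not stated here.

```python
# Hypothetical threshold; the actual minimum group size is not stated.
MIN_GROUP_SIZE = 10

def company_themes(records, min_group_size=MIN_GROUP_SIZE):
    """Count distinct users per theme and drop themes below the threshold.

    `records` is an iterable of (user_id, theme) pairs. Only counts are
    returned, never user ids.
    """
    users_by_theme = {}
    for user_id, theme in records:
        users_by_theme.setdefault(theme, set()).add(user_id)
    return {
        theme: len(users)
        for theme, users in users_by_theme.items()
        if len(users) >= min_group_size
    }
```

With this sketch, a theme raised by twelve people shows up as a count of 12, while a theme raised by only two people is left out of the report entirely.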

How we handle high-risk conversations

Sometimes, people talk about serious issues, like self-harm or suicidal thoughts. Oli is not the right tool for those conversations, and we don’t pretend it is.

If the Risk Agent detects something high-risk, Oli pauses and checks with you first. If you confirm, it will respond with care and redirect you to crisis support services immediately. It will not continue the conversation.
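
The flow described above can be pictured as a small state machine. This is a sketch under assumptions: the state names and return values are made up for illustration, and what happens when you say the flag was a false alarm (here, the chat simply resumes) is an assumption, not something this post specifies.

```python
from enum import Enum, auto

class State(Enum):
    ACTIVE = auto()                  # normal coaching conversation
    AWAITING_CONFIRMATION = auto()   # Oli has paused and is checking in
    CLOSED = auto()                  # redirected to crisis support

class Conversation:
    def __init__(self) -> None:
        self.state = State.ACTIVE

    def on_message(self, risk_detected: bool) -> str:
        """Return which kind of reply to send for a new user message."""
        if self.state is State.CLOSED:
            # The conversation does not continue after a redirect.
            return "crisis_support"
        if self.state is State.AWAITING_CONFIRMATION or risk_detected:
            self.state = State.AWAITING_CONFIRMATION
            return "check_in"
        return "coach_reply"

    def on_confirmation(self, confirmed: bool) -> str:
        """Handle the user's answer to the check-in question."""
        if self.state is not State.AWAITING_CONFIRMATION:
            raise ValueError("no check-in is pending")
        if confirmed:
            self.state = State.CLOSED
            return "crisis_support"
        # Assumption: if the user does not confirm, normal coaching resumes.
        self.state = State.ACTIVE
        return "coach_reply"
```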

We designed this to protect people, not push boundaries. We’ve seen what can go wrong when this is done badly. This has been our number one priority from day one.

How we test Oli

We didn’t launch anything publicly until we’d tested it thoroughly with real people. A group of opt-in beta users volunteered to help us stress-test Oli, trying out all kinds of real-life scenarios and intentionally pushing its boundaries. They gave fast, honest feedback that helped us improve the experience quickly.

We never release new features or capabilities without putting them through this phase first. So by the time Oli gets to you, it’s already been through rigorous testing.

What’s next for Oli

We’re always improving Oli. Here’s what we’re working on now:

- Smarter conversation starters and more helpful follow-ups

- New specialist agents for specific challenges

- An "incognito mode" for one-off chats you don’t want saved

- Ways to use Oli alongside your human coach, to go deeper or stay on track

Everything we build starts with your experience in mind. We don’t have all the answers, but we’ll keep sharing how we’re building, learning, and improving along the way.

Thanks for being part of the journey.

— André, Head of Product

Questions or feedback? We’d love to hear from you. Just drop us a line at product@getoliva.health.